Artificial intelligence is changing how businesses operate, and some of the practices it affects have become heated topics among ethicists, who warn that algorithms have limitations and may produce harmful results if left unchecked.

One of these controversial areas is recruitment and hiring. Recent studies have shown that, even though AI is a promising tool that can increase efficiency and objectivity, it may also be inadvertently creating new forms of discrimination and perpetuating existing biases.

Study Found That AI Systems Discriminated Against Candidates Because of Their Names

Research from the Royal Institute of Technology in Stockholm recently uncovered some surprising patterns in AI-assisted hiring. The study, led by Celeste de Nadai, found that current models show clear and unexpected biases when evaluating and selecting candidates.

The research project examined the output of various large language models (LLMs), including Google’s Gemini-1.5-flash, Mistral AI’s Open-Mistral-nemo-2407, and OpenAI’s GPT-4o-mini.

Researchers found that men with Anglo-Saxon names received lower ratings than other candidates when evaluated for a software engineer position.

The study was quite rigorous: it involved 4,800 inferences, that is, individual requests sent to a model to obtain a response. It also varied the models' temperature settings and included 200 different candidates, divided equally between men and women and grouped into four cultural groups.

De Nadai theorizes that this bias against men with Anglo-Saxon names might represent an overcorrection of biases identified in earlier studies, though she acknowledges it is too early to tell whether this is the actual cause.

A Growing Percentage of Companies Is Embracing AI for Hiring Purposes

The adoption of AI in hiring has already gone quite far. A recent IBM survey found that 42% of the companies surveyed worldwide already use AI to screen candidates, while another 40% are actively considering AI-powered recruitment tools.

The tools used for this process vary widely, ranging from CV scanners to tools that create screening tests.

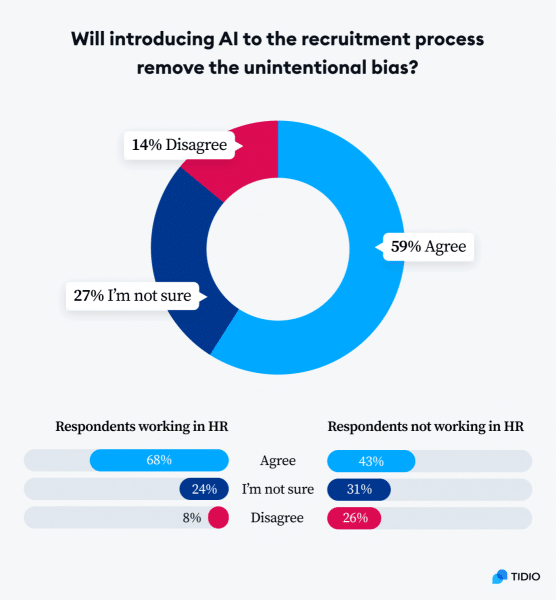

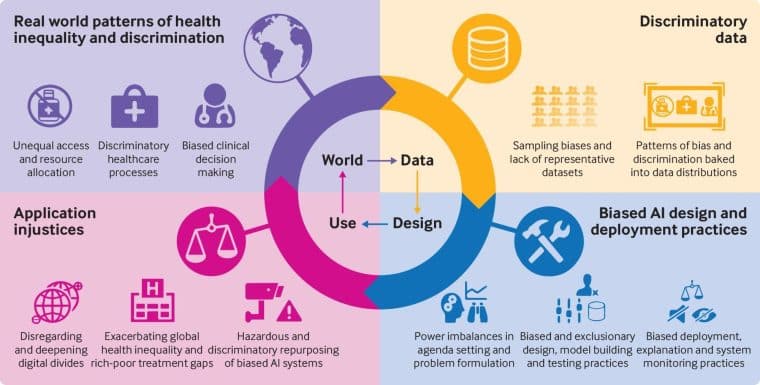

Organizations hope to minimize human bias in the recruitment process as much as possible. However, evidence now suggests that these systems may either create new biases or amplify existing ones.

Hilke Schellmann, assistant professor at New York University and the author of “The Algorithm: How AI Can Hijack Your Career and Steal Your Future,” warns that the greatest risk these tools pose is not job displacement but rather preventing qualified candidates from securing positions in the first place.

A study that included interviews with 22 different professionals in the field of talent sourcing and human resources management identified two prevailing biases: “stereotype bias” and “similar-to-me bias.”

These two biases may have leaked into existing AI models and become embedded in their decision-making, creating a vicious cycle that could be difficult to break.

The problem is compounded when AI systems are asked to infer certain variables rather than measure them directly.

For example, if experience is treated as a positive factor, candidates with more years in a certain field or role may be favored even though the quality of that experience is lower than that of candidates with fewer years in the field but a much richer background.

Four Steps to Reduce AI Bias in Hiring

Addressing AI bias will be critical to ensure that the technology is implemented fairly and adequately. However, achieving this is no easy task.

Sandra Wachter, professor of technology and regulation at the University of Oxford’s Oxford Internet Institute, emphasizes that unbiased AI is not just ethically necessary but also economically beneficial.

Wachter developed a tool called the Conditional Demographic Disparity test that aims to assess AI models and identify existing biases. A handful of large corporations, including Amazon (AMZN) and IBM, have already used the test.

“There is a very clear opportunity to allow AI to be applied in a way so it makes fairer, and more equitable decisions that are based on merit and that also increase the bottom line of a company.”
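To make the Conditional Demographic Disparity idea mentioned above more concrete, here is a minimal sketch based on the commonly published formulation: demographic disparity is a group's share among rejected candidates minus its share among accepted candidates, and the conditional version averages that figure across strata (here, the role applied for), weighted by stratum size. The function names, field names and toy data are hypothetical and purely illustrative. This is not Wachter's tool or any vendor's implementation.

```python
from collections import defaultdict


def demographic_disparity(records, group):
    """Share of `group` among rejected candidates minus its share among accepted ones."""
    rejected = [r for r in records if not r["accepted"]]
    accepted = [r for r in records if r["accepted"]]
    if not rejected or not accepted:
        # Assumption of this sketch: a stratum with only one outcome contributes 0.
        return 0.0
    share_rejected = sum(r["group"] == group for r in rejected) / len(rejected)
    share_accepted = sum(r["group"] == group for r in accepted) / len(accepted)
    return share_rejected - share_accepted


def conditional_demographic_disparity(records, group, stratum_key):
    """Size-weighted average of the disparity computed inside each stratum."""
    strata = defaultdict(list)
    for r in records:
        strata[r[stratum_key]].append(r)
    total = len(records)
    return sum(
        len(rows) / total * demographic_disparity(rows, group)
        for rows in strata.values()
    )


def make(group, role, accepted, n):
    """Build n identical toy records (hypothetical data, not from any study)."""
    return [{"group": group, "role": role, "accepted": accepted} for _ in range(n)]


# Toy data: group A applies mostly to the more competitive role X.
records = (
    make("A", "X", True, 2) + make("A", "X", False, 4)
    + make("B", "X", True, 1) + make("B", "X", False, 2)
    + make("A", "Y", True, 2) + make("A", "Y", False, 1)
    + make("B", "Y", True, 4) + make("B", "Y", False, 2)
)

dd = demographic_disparity(records, "A")
cdd = conditional_demographic_disparity(records, "A", "role")
print(f"unconditional disparity: {dd:.3f}")
print(f"conditional on role:     {cdd:.3f}")
```

In this toy data, group A looks disadvantaged overall, but the disparity vanishes once the role is taken into account, because both groups are accepted at the same rate within each role. A positive conditional value would instead point to a disparity that the conditioning variable does not explain.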

Wachter and other researchers have made the following recommendations to help companies ensure that their algorithms are free from bias, or that any remaining biases are minimized as much as possible.

- Training HR personnel: Organizations need to implement structured training programs for HR professionals focused on information system development and AI. This training should cover AI fundamentals, bias identification, and mitigation strategies.

- Improved collaboration between HR professionals and AI developers: Companies should create integrated teams that include both HR and AI specialists to bridge communication gaps and align their efforts.

- Using more specific datasets: The development of culturally relevant datasets is vital to reduce biases in AI systems. This requires the careful curation of diverse and representative data that can help create more equitable hiring practices.

- Development of ethical standards for AI-powered hiring: There is a pressing need for comprehensive guidelines and ethical standards governing the use of AI in recruitment. These should promote transparency and accountability in AI-driven decision-making processes.

Survey Finds That Candidates See AI as More Biased Than Humans

A 2023 survey by the American Staffing Association found that 43% of respondents believe that AI could be more biased than humans. This perception highlights growing discontent among prospective workers about AI tools being used to assess their skills and fitness for a position.

Moreover, the US Equal Employment Opportunity Commission has acknowledged the issue and has included the impact of AI on hiring processes as one of the critical issues to tackle in its four-year strategic enforcement plan.

Regulatory attention is needed to ensure that companies are both aware of the problem and willing to make the changes required to give all candidates equitable hiring opportunities. Corporations are responsible for ensuring that AI recruitment tools promote rather than hinder workplace diversity and inclusion.

As organizations continue to integrate AI into their hiring processes, they should stay focused on implementing solutions that enhance current practices rather than deepen existing issues like racial or cultural biases. Addressing these challenges will be critical to promoting the widespread adoption of these tools and increasing productivity in talent sourcing departments.