The former head of Binance, Changpeng Zhao (CZ), warned the crypto community about the proliferation of deepfake videos that primarily target crypto investors.

The founder of the crypto exchange, who was recently released from prison after pleading guilty to violating US anti-money laundering laws, warned in an X post that fake videos of him were circulating on social platforms.

CZ has been active on his X account since September 27, after serving four months in prison. On Thursday, he confirmed that he will attend Binance Blockchain Week, an event scheduled for October 30-31, in his “personal capacity.” Friday’s post is the most serious remark he has made since leaving prison.

There are deepfake videos of me on other social media platforms. Please beware!

— CZ 🔶 BNB (@cz_binance) October 11, 2024

He noted that the firm has been doing very well during his absence.

CZ and Binance were hit with penalties as authorities from the United States found that the company had facilitated money laundering activity and helped nations and entities violate sanctions imposed by the country.

The exchange was hit with a staggering $4.3 billion fine while its former CEO was forced to pay $100 million in penalties as well. CZ also stepped down and showed remorse for his negligence.

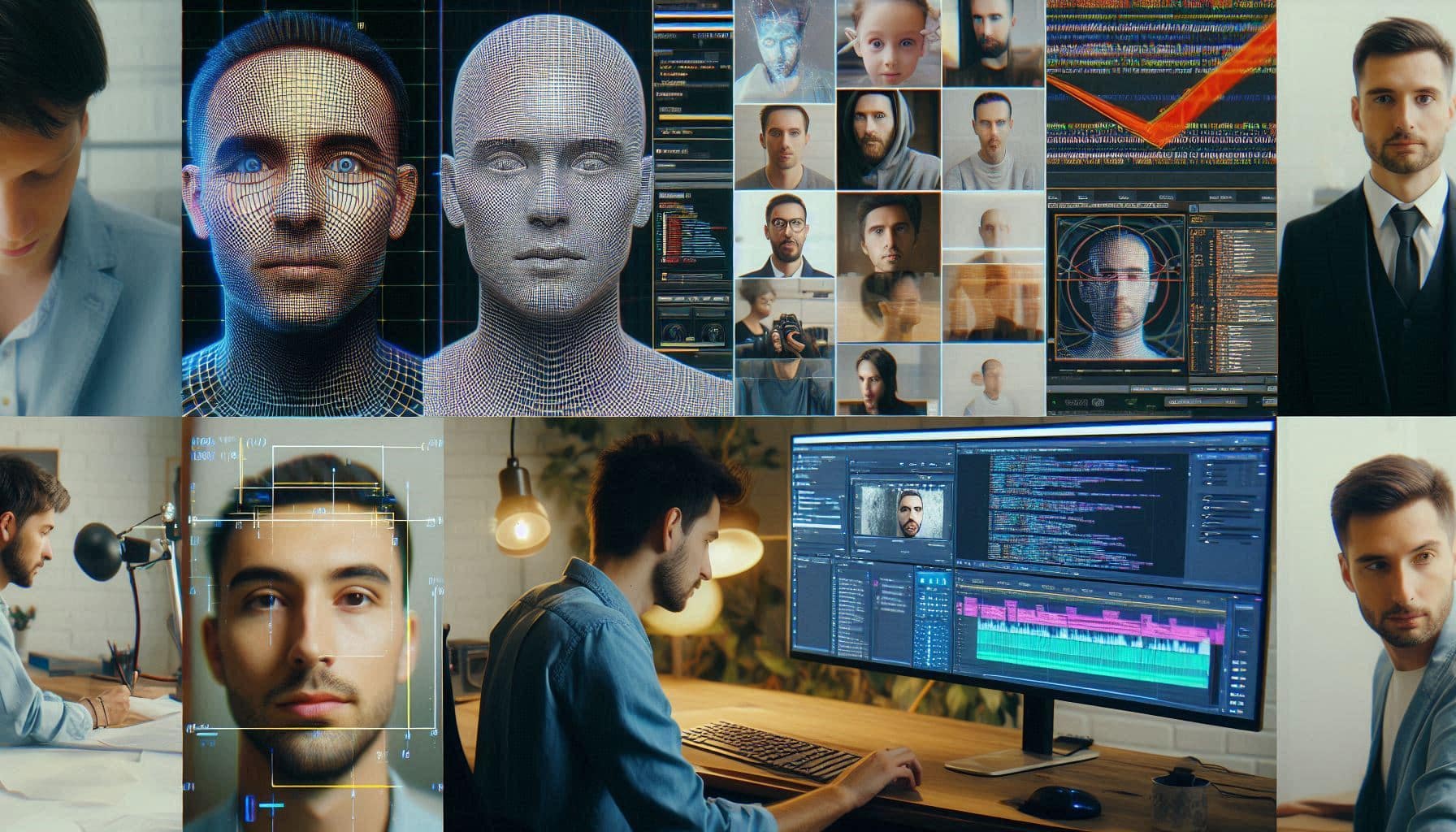

Deepfakes Use AI Technology to Create Fake Footage

Digital deception via deepfakes has become a trend lately. These videos are categorized as “synthetic media.” They commonly take existing footage and use artificial intelligence (AI) to replace the audio and alter the head and mouth movements.

The creation of these convincing fakes typically involves the use of deep neural networks, particularly generative adversarial networks (GANs). The process includes data collection, AI model training, face swapping, voice synthesis, and refinement to produce increasingly realistic results.

They are typically used to promote scams and fraudulent ventures, and statistics show that fraudsters are making thousands of dollars from videos in which public figures like Elon Musk, Donald Trump, and Taylor Swift appear to promote cryptocurrencies.

Charles Hoskinson, another prominent crypto figure and the creator of Cardano, emphasized that deepfakes have gotten much more sophisticated and they are proliferating at an alarming rate.

He predicts that, in a couple of years, the public will find it quite difficult to differentiate between a deepfake piece and a real video depicting an individual.

Elliptic: CryptoCore Reaps $5 Million per Quarter with Deepfakes

Other prominent figures in the crypto space have also had their images used in deepfakes designed to scam the public. Some of the most frequently depicted include the founder of MicroStrategy, Michael Saylor, the head of Ripple, Brad Garlinghouse, and the co-founder of Ethereum, Vitalik Buterin.

Videos featuring these individuals provide credibility to whatever claim or offering the video is promoting, which increases the chances of deceiving the victims.

The blockchain research company Elliptic found that crypto scams involving deepfakes tend to follow a similar playbook: they invite unwary investors to transfer their digital assets to a specific crypto wallet with the promise that they will receive some handsome rewards in exchange.

A group known as “CryptoCore” has reportedly earned millions by defrauding thousands of investors. Elliptic estimates that the group made over $5 million in just three months with this kind of campaign.

The financial impact of these scams is substantial and growing. During SpaceX’s integrated flight test in June, an estimated 50 YouTube accounts were hijacked, resulting in 500 unauthorized transactions and the theft of $1.4 million.

In another instance, a Trump-Musk deepfake scam identified by Elliptic generated around $24,000 in revenue during a short period. These figures likely represent only a fraction of the total losses that investors have incurred as many of these scams go unreported or undetected.

Crypto and Politics Are the Most Appealing Subjects for Deepfakes

As the US presidential election approaches, there’s growing concern about the use of deepfakes to spread fake news, steal crypto, and shape the public’s perception of candidates.

The appearance of Donald Trump and Robert F. Kennedy Jr. at the Bitcoin 2024 Conference in Nashville provided deepfake creators with valuable material for their videos. Additionally, Vice President Kamala Harris is reportedly set to soften her stance on blockchain technologies, which could make her a target for deepfake scammers as well.

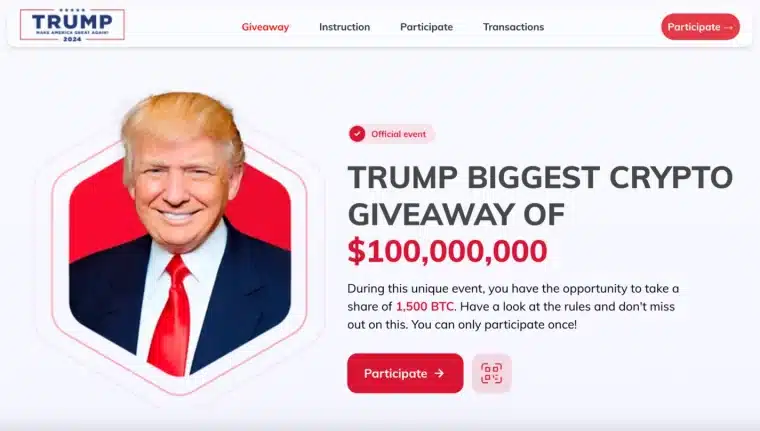

Elliptic uncovered a website that bears the “Make America Great Again” logo and the image of Trump. The site claims to be the former president’s biggest crypto giveaway and uses deepfake pieces to convince visitors.

Investors are promised that they will receive twice the amount they donate to the campaign from a $100 million pot.

Beyond politics, Avast’s investigation into CryptoCore revealed that the most exploited topics for AI-powered deepfake scams included SpaceX, MicroStrategy, Ripple, Tesla, BlackRock, and Cardano. These topics often align with current events or popular trends in the crypto and tech industries.

How Can Investors Protect Themselves from Deepfakes?

As the threat of deepfake scams continues to grow, it’s crucial for the crypto community to stay vigilant. Experts recommend various strategies that could help investors avoid falling prey to these scams, including:

- Verifying information from multiple reliable sources before making investment decisions.

- Being skeptical of giveaways or offers that promise exaggerated rewards.

- Checking official channels to verify announcements or promotions.

- Using deepfake scanning software to test suspicious videos and assess their authenticity.

New tools are also being developed to detect deepfake videos. Although they are not 100% effective, they often provide insights into a video’s integrity and the likelihood that the footage is real.

A handful of projects are exploring the possibility of using blockchain technology to verify the authenticity of videos and images by creating an immutable record of original content.
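The idea behind such a record can be illustrated with a simple content fingerprint. The sketch below is only an assumption about how a project of this kind might work, not a description of any specific one: the publisher computes a SHA-256 hash of the original file and registers it, and anyone can later recompute the hash of a copy and compare. The function names and the sample bytes are hypothetical, and the "registered" value stands in for a hash that would be stored on a blockchain.

```python
import hashlib


def content_fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the raw file bytes."""
    return hashlib.sha256(data).hexdigest()


def matches_registered(data: bytes, registered_hash: str) -> bool:
    """Check a copy of the content against a previously registered hash."""
    return content_fingerprint(data) == registered_hash.lower()


# Hypothetical stand-ins for real video bytes.
original = b"official announcement video bytes"
tampered = original + b" edited"

# In a real system this value would be read from the immutable record.
registered = content_fingerprint(original)

print(matches_registered(original, registered))  # True
print(matches_registered(tampered, registered))  # False
```

An exact hash like this only proves that a file is byte-for-byte identical to the registered original. A legitimate copy that has been re-encoded or compressed by a platform would also fail the check, so a mismatch signals "not the registered file" rather than "definitely a deepfake."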

Investors should be wary of poor lip-syncing, unnatural voice patterns, or inconsistencies in video quality as these clues often reveal if the footage is a deepfake. Using public blockchain explorers to verify transactions and wallet addresses can also help ensure the legitimacy of the claims.
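As a rough illustration of the address check, the sketch below compares the wallet address shown in a video against a list of addresses taken from an organization's official channels. Everything here is an assumption for illustration: the addresses are made-up placeholders, the function name is hypothetical, and the case-insensitive comparison only makes sense for Ethereum-style hexadecimal addresses.

```python
# Placeholder addresses, not real wallets. In practice this set would be
# copied by hand from the organization's verified website or account.
OFFICIAL_ADDRESSES = {
    "0x" + "a" * 40,
    "0x" + "b" * 40,
}


def is_known_official(address: str, official=OFFICIAL_ADDRESSES) -> bool:
    """Return True only if the address exactly matches an official one.

    Whitespace is trimmed and case is ignored, which is valid for
    Ethereum-style hex addresses but not for case-sensitive formats.
    """
    normalized = address.strip().lower()
    return normalized in {a.lower() for a in official}


advertised = "0x" + "c" * 40  # address shown in a suspicious video
print(is_known_official(advertised))                 # False
print(is_known_official(" 0x" + "A" * 40 + " "))     # True
```

An address that does not match any officially published one should be treated as unverified, regardless of how convincing the video looks. A block explorer can then show whether that address simply collects incoming funds without ever paying anything back.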

As AI technology continues to advance, the sophistication of deepfake scams will likely increase. This constitutes a challenge for the cryptocurrency industry, regulators, and law enforcement agencies.

Software that uses AI-powered tools to detect deepfake videos is being refined to identify and remove this type of content from popular platforms like YouTube. However, raising the public’s awareness about the threat of tampered footage is crucial to reduce the financial harm caused by this type of scam.