Email marketing is a regular source of frustration for many businesses. The fact is, a lot of people are tired of getting emails from businesses.

So as someone sending emails on behalf of a business, you’re probably on the lookout for new ways to improve the performance of your emails. You might, for example, use an email subject line testing tool to improve your open rates.

But how accurate are these email testing tools, exactly? How much stock should you put into the ratings they give your subject lines?

Comparing Email Subject Line Testing Tools

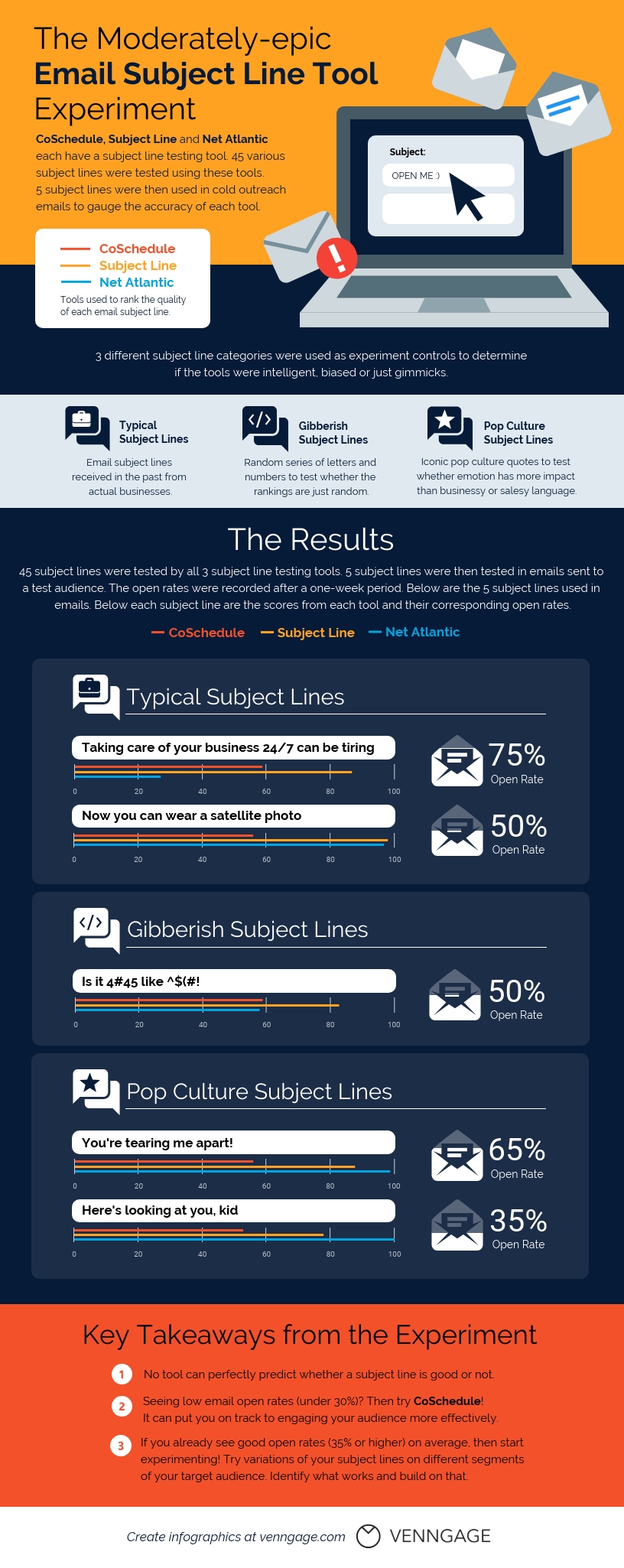

Venngage did an email subject line study testing three of the top email subject line testing tools on the market: CoSchedule, Subject Line and Net Atlantic.

They ran 45 different subject lines through each tool to see what rating each one received. The subject lines fell into three broad categories:

- “Real” or “typical” email subject lines (real subject lines that the writer had received from businesses)

- Gibberish subject lines (containing random letters and numbers to see if the tools could identify gibberish, or if the tools were just gimmicks)

- Pop culture subject lines (pop culture quotes and references to sound more relatable)

Five subject lines were then sent to test audiences to collect the open rates.

This infographic by Venngage Timeline Maker compares the subject line scores against the actual open rates they got:

Overall, CoSchedule’s scores were the most consistent with the actual open rates. Their tool bases the score on character and word range, word lengths, specific words used, emojis, and similar characteristics.

Meanwhile, Net Atlantic granted the lowest score to the email subject line that got the highest actual open rate, and awarded the highest score (100%) to the email subject line with the lowest actual open rate. I’ll let you draw your own conclusions there.

Subject Line’s scores were mostly inconsistent with the actual open rates.
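If you want to check a tool against your own campaigns in the same spirit, one rough option is a rank correlation between the scores the tool gave and the open rates you actually saw. The sketch below is not the method Venngage used (the study doesn't say how consistency was judged); it is a minimal Spearman rank correlation in plain Python, and the function names are made up for illustration. A result near 1 means the tool ranks subject lines in roughly the same order as your audience does, near -1 means the opposite order, and near 0 means little relationship.

```python
def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Find the run of equal values starting at position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(scores, open_rates):
    """Spearman rank correlation between tool scores and open rates."""
    if len(scores) != len(open_rates) or len(scores) < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    rx, ry = ranks(scores), ranks(open_rates)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    var_x = sum((a - mean_x) ** 2 for a in rx)
    var_y = sum((b - mean_y) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        return float("nan")  # every value tied: correlation is undefined
    return cov / (var_x * var_y) ** 0.5
```

You would call `spearman(tool_scores, open_rates)` with one score and one open rate per subject line you sent. With only a handful of subject lines (the study sent five), treat the number as a rough signal rather than proof.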

What can we take away from this study?

Certainly, one of the main takeaways is that the ratings from email testing tools should be taken with a grain of salt. But in defence of the people who created these tools, there are many factors that contribute to the likelihood of your emails being opened.

Some of those factors include:

- How personalized your subject lines are (personalized email subject lines can increase open rates by up to 50%)

- When you send your emails

- How you’ve segmented your email list, and how relevant the subject line is to each audience

- Whether your subject line contains words that can trigger spam filters, such as “free” or “sale”

Ultimately, you will have to run tests yourself to see what gets the best results from your audience. Hey, you might even consider ditching subject lines altogether where appropriate (a study found that emails without a subject line were opened 8% more than those with a subject line).
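If you do run your own tests, it helps to check whether a difference in open rates is bigger than what chance alone would produce. Here is a minimal sketch of one common approach, a two-proportion z-test, in plain Python. It assumes you sent subject line A and subject line B to two randomly split groups and counted the opens for each; the function name and parameters are hypothetical, not from any particular email platform.

```python
import math


def two_proportion_z_test(opens_a, sent_a, opens_b, sent_b):
    """Compare two open rates; return (z, two-sided p-value)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    # Pooled open rate under the assumption that A and B perform the same.
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so no detectable difference
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(200, 1000, 260, 1000)` compares a 20% open rate against a 26% open rate on 1,000 sends each. A small p-value (below 0.05 is a common convention) suggests the difference is unlikely to be chance alone. The approximation works best with a few hundred sends or more per variant.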

What do you think? What tests have you run to improve your email open rates? What tools have you found to be helpful?