Artificial intelligence (AI) now works behind the scenes of much of what we see online, and not always honestly. Thousands of articles are being written without human hands, and AI-driven ads are misleading searchers. Together they reveal both the impressive and the worrying sides of AI in content creation and advertising.

This mix of progress and deception shows how AI, once seen as a tool of the future, now plays a significant role in spreading falsehoods online. It also makes it harder to tell real content from fake.

Let’s look at how AI-generated content and deceptive AI ads are changing Google Search, and ask: can we trust AI we can’t see, or are we simply being tricked?

AI: From Dream to Deception

Content creators are drawn to generative AI, particularly large language models (LLMs) such as ChatGPT, largely because it can produce large volumes of new material quickly. This surge in AI-generated content is impressive, but it also raises questions about whether that content is trustworthy and genuine, and with good reason.

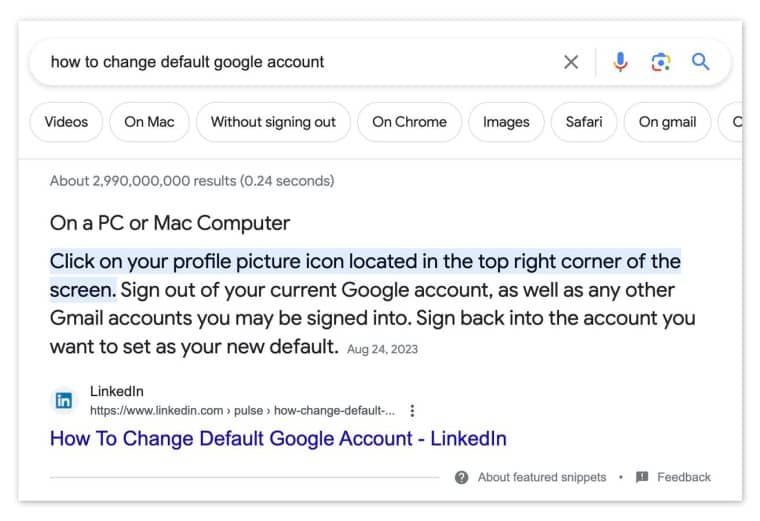

A telling example of the problems with AI-generated content is the search result for “how to change default Google account”.

A LinkedIn user named Morgan Mitchell had published more than 150 articles, all of which appeared useful, were formatted for easy reading, and even included customer-service phone numbers.

It turned out that there was no Morgan Mitchell. The profile claimed to be a content manager at Adobe, but Adobe confirmed that no one by that name was affiliated with the company.

The LinkedIn profile was fake, AI-generated, and designed to produce content that Google would rank highly in its search results. The article on changing the default Google account even listed a customer-service number that does not belong to Google, either inserted to con unsuspecting users or hallucinated outright by the AI.

This situation reveals the darker side of using AI to create content. While AI has the capacity to produce informative and appealing content, it can also be misused to spread false information and scams.

The Morgan Mitchell case shows why users need to be wary of AI-generated content that convincingly imitates human writing. As AI improves, distinguishing real content from AI-generated content will get harder, requiring better detection methods and a more discerning approach from internet users.

AI Ads: The Fine Line Between Innovative and Misleading

AI is also fueling deceptive advertising. Google is struggling with malicious ads that impersonate trusted sites.

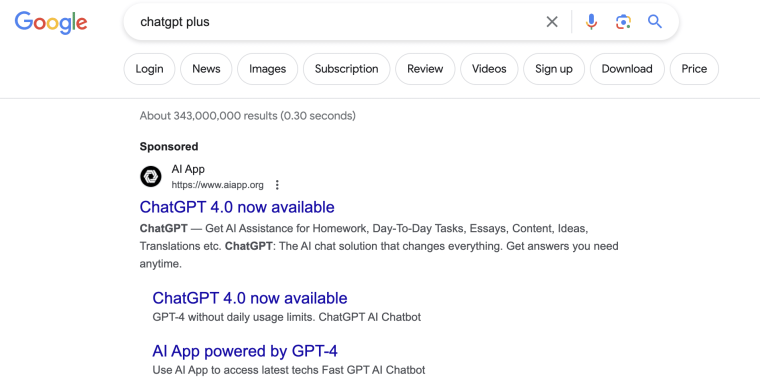

For example, a Google search for “ChatGPT Plus” returns, as its first result, an ad that appears above the genuine OpenAI results.

The ad leads to a website not affiliated with OpenAI (aiapp.org) that charges for what OpenAI already provides for free: the site doesn’t appear to offer GPT-4 at all. It might look like a small, one-off scam, but it has tricked many searchers.

The problem is widespread enough to have sparked a surge of discussion about the site on platforms such as Reddit. Users pointed out that it poses as an official ChatGPT upgrade page, largely by using a design that closely mimics OpenAI’s.

One user said the first sign that something was amiss was the absurdly poor quality of the service’s responses. They had been using the standard, free version of ChatGPT to help refine a complex Python application they were developing.

On aiapp.org, however, they found the responses comically inadequate across every model offered, including GPT-3.5, GPT-4, and PaLM 2. They also stressed that the scam site’s GPT-3.5 didn’t come close to the GPT-3.5 in the real ChatGPT.

Many other Reddit users reported difficulty canceling their subscriptions once they realized they had paid for a service that was not actually OpenAI’s ChatGPT Plus.

Users on Trustpilot also reported trouble removing their payment information from the site, saying they could not delete their card details and were still charged after canceling their subscriptions.

This site is far from the only impersonation scam using Google’s advertising platform to squeeze money out of unsuspecting victims. Such traps are even more common in the crypto world, where scam sites pose as trusted finance platforms (often with near-identical URLs) to get you to connect your crypto wallet. Before you know it, the wallet is drained.

Google’s Battle for Truth in an AI-Driven World

A Google spokesperson said the company is deeply committed to the quality and trustworthiness of its search results, adding that Google’s results are generally of higher quality than those of other search engines.

This commitment is closely tied to Google’s E-E-A-T principle, which stands for experience, expertise, authoritativeness, and trustworthiness. E-E-A-T is central to how Google evaluates AI-generated content: the focus is on the quality of the content rather than how it was produced. As a result, AI-generated content that is accurate and useful is allowed in search results.

Google also says it has built spam-fighting systems that keep 99% of search results free of spam, refined through user feedback and continual improvements to its search algorithms. The company says it strictly prohibits mass-produced content designed to manipulate search rankings and actively removes ads that carry malware or aim to scam users, taking down billions of such ads every year.

Despite all this, AI content aimed at misleading users appears to be widespread. Reddit users continue to criticize Google for failing to address misleading practices such as the aiapp.org ad, which exploits the popularity of AI tools like ChatGPT.

AI Is a Double-Edged Sword – Don’t Let It Cut You

The core problem with AI’s role in content creation and advertising is that it cuts both ways. AI enables new, efficient ways of producing content, but it also opens the door to dishonest practices that trick users. Fake profiles and deceptive ads not only erode user trust but also raise the question of whether existing safeguards are enough.

Although Google is committed to high-quality, spam-free search results, the rise of AI content designed to deceive users points to the need for stronger safeguards. Google’s E-E-A-T standards for evaluating content are essential, but as AI technology advances, the methods for preventing its misuse must advance too.

Whether you run a business, create content, or simply browse the internet, the message is clear: stay sharp and question what you see online. Make sure that what you’re reading or watching comes from a trustworthy source.

As we move forward, working together to promote honesty, check our facts, and stick to high ethical standards will help us make the most of AI’s potential while keeping our digital space safe and reliable.